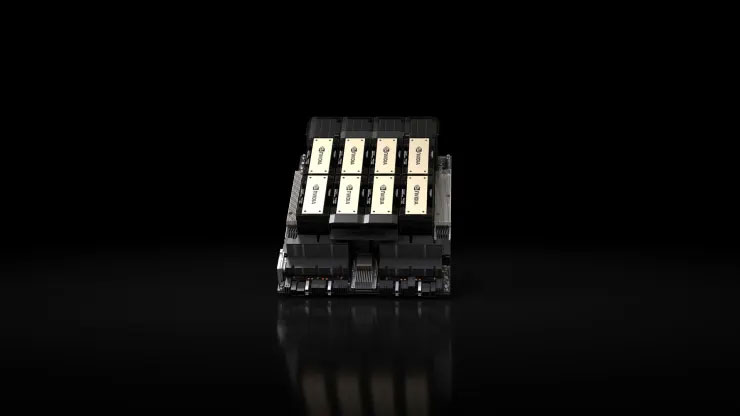

Nvidia unveiled the H200 on November 13, 2023, a graphics processing unit (GPU) designed for training and deploying the advanced artificial intelligence models driving the generative AI boom. The new GPU is an upgrade from the H100, the chip OpenAI used to train its most advanced large language model, GPT-4. The limited supply of these chips has sparked fierce competition among large companies, startups and government agencies.

H100 chips cost between $25,000 and $40,000 each, according to Raymond James estimates, and large numbers of them must work together to build the largest models, a process known as "training." Demand for Nvidia's AI GPUs has driven a sharp rise in the company's stock price, up more than 230% so far in 2023. Nvidia expects third-quarter revenue of approximately $16 billion, an increase of 170% from a year earlier.

The H200's main improvement is its 141GB of next-generation HBM3e memory, which boosts the chip's performance during "inference," the process of using a trained model to generate text, images, or predictions. Nvidia claims the H200 can generate output nearly twice as fast as the H100, based on a test using Meta's Llama 2 LLM.

The H200 is expected to ship in the second quarter of 2024 and will compete with AMD's MI300X GPU, a similar chip that also offers additional memory to accommodate large models for inference.

A significant advantage of the H200 is its compatibility with the H100: AI companies already using the older chip can adopt the new version without changing their server systems or software. The H200 will be available in four- or eight-GPU server configurations on Nvidia's HGX complete systems, as well as in a chip called the GH200, which pairs the H200 GPU with an Arm-based processor.

However, the H200 may not hold the title of Nvidia's fastest AI chip for long. While Nvidia offers a variety of chip configurations, major advances typically arrive about every two years, when manufacturers move to a new architecture that unlocks larger performance gains than added memory or other minor optimizations. Both the H100 and H200 are based on Nvidia's Hopper architecture.

In October, Nvidia told investors it would move from a two-year architecture cadence to a one-year release pattern because of high demand for its GPUs. The company shared a slide suggesting that it will announce and launch a Blackwell-based chip in 2024.